\(\newcommand{\bmu}{\boldsymbol{\mu}}\) \(\newcommand{\bSigma}{\boldsymbol{\Sigma}}\) \(\newcommand{\bfbeta}{\boldsymbol{\beta}}\) \(\newcommand{\bflambda}{\boldsymbol{\lambda}}\) \(\newcommand{\bgamma}{\boldsymbol{\gamma}}\) \(\newcommand{\bsigma}{{\boldsymbol{\sigma}}}\) \(\newcommand{\bpi}{\boldsymbol{\pi}}\) \(\newcommand{\btheta}{{\boldsymbol{\theta}}}\) \(\newcommand{\bphi}{\boldsymbol{\phi}}\) \(\newcommand{\balpha}{\boldsymbol{\alpha}}\) \(\newcommand{\blambda}{\boldsymbol{\lambda}}\) \(\renewcommand{\P}{\mathbb{P}}\) \(\newcommand{\E}{\mathbb{E}}\) \(\newcommand{\indep}{\perp\!\!\!\perp} \newcommand{\bx}{\mathbf{x}}\) \(\newcommand{\bp}{\mathbf{p}}\) \(\renewcommand{\bx}{\mathbf{x}}\) \(\newcommand{\bX}{\mathbf{X}}\) \(\newcommand{\by}{\mathbf{y}}\) \(\newcommand{\bY}{\mathbf{Y}}\) \(\newcommand{\bz}{\mathbf{z}}\) \(\newcommand{\bZ}{\mathbf{Z}}\) \(\newcommand{\bw}{\mathbf{w}}\) \(\newcommand{\bW}{\mathbf{W}}\) \(\newcommand{\bv}{\mathbf{v}}\) \(\newcommand{\bV}{\mathbf{V}}\) \(\newcommand{\bfg}{\mathbf{g}}\) \(\newcommand{\bfh}{\mathbf{h}}\) \(\newcommand{\horz}{\rule[.5ex]{2.5ex}{0.5pt}}\) \(\renewcommand{\S}{\mathcal{S}}\) \(\newcommand{\X}{\mathcal{X}}\) \(\newcommand{\var}{\mathrm{Var}}\) \(\newcommand{\pa}{\mathrm{pa}}\) \(\newcommand{\Z}{\mathcal{Z}}\) \(\newcommand{\bh}{\mathbf{h}}\) \(\newcommand{\bb}{\mathbf{b}}\) \(\newcommand{\bc}{\mathbf{c}}\) \(\newcommand{\cE}{\mathcal{E}}\) \(\newcommand{\cP}{\mathcal{P}}\) \(\newcommand{\bbeta}{\boldsymbol{\beta}}\) \(\newcommand{\bLambda}{\boldsymbol{\Lambda}}\) \(\newcommand{\cov}{\mathrm{Cov}}\) \(\newcommand{\bfk}{\mathbf{k}}\) \(\newcommand{\idx}[1]{}\) \(\newcommand{\xdi}{}\)

6.5. Application: linear-Gaussian models and Kalman filtering#

In this section, we illustrate the use of linear-Gaussian models for object tracking. We first give some background.

6.5.1. Multivariate Gaussians: marginals and conditionals#

We will need the marginal and conditional densities of a multivariate Gaussian. For this purpose, we require various formulas and results about block matrices.

Properties of block matrices Recall that block matrices have a convenient algebra that mimics the usual matrix algebra. Consider a square block matrix with the same partitioning of the rows and columns, that is,

where \(A \in \mathbb{R}^{n \times n}\), \(A_{ij} \in \mathbb{R}^{n_i \times n_j}\) for \(i, j = 1, 2\) with the condition \(n_1 + n_2 = n\). Then it is straightforward to check (try it!) that the transpose can be written as

In particular, if \(A\) is symmetric then \(A_{11} = A_{11}^T\), \(A_{22} = A_{22}^T\) and \(A_{21} = A_{12}^T\).

EXAMPLE: For instance, consider the \(\mathbf{z} = (\mathbf{z}_1, \mathbf{z}_2)\), where \(\mathbf{z}_1 \in \mathbb{R}^{n_1}\) and \(\mathbf{z}_2 \in \mathbb{R}^{n_2}\). We want to compute the quadratic form

where \(A_{ij} \in \mathbb{R}^{n_i \times n_j}\) for \(i,j = 1, 2\) with the conditions \(n_1 + n_2 = n\), and \(A_{11}^T = A_{11}\) and \(A_{22}^T = A_{22}\).

We apply the block matrix product formula twice to get

\(\lhd\)

EXAMPLE: Let \(A_{ii} \in \mathbb{R}^{n_i \times n_i}\) for \(i = 1, 2\) be invertible. We claim that

The matrix on the right-hand side is well-defined by the invertibility of \(A_{11}\) and \(A_{22}\). We check the claim using the formula for matrix products of block matrices. The matrices above are block diagonal.

Indeed, we obtain

and similarly for the other direction. \(\lhd\)

EXAMPLE: Let \(A_{21} \in \mathbb{R}^{n_2 \times n_1}\). Then we claim that

A similar formula holds for the block upper triangular case. In particular, such matrices are invertible (which can be proved in other ways, for instance through determinants).

It suffices to check:

Taking a transpose gives a similar formula for the block upper triangular case. \(\lhd\)

Inverting a block matrix We will need a classical formula for inverting a block matrix. We start with the concept of a Schur complement.

DEFINITION (Schur Complement) \(\idx{Schur complement}\xdi\) Consider the matrix \(B \in \mathbb{R}^{n \times n}\) in block form

where \(B_{11} \in \mathbb{R}^{n_1 \times n_1}\), \(B_{22} \in \mathbb{R}^{n - n_1 \times n - n_1}\), \(B_{12} \in \mathbb{R}^{n_1 \times n - n_1}\), and \(B_{21} \in \mathbb{R}^{n - n_1 \times n_1}\). Then, provided \(B_{22}\) is invertible, the Schur complement of the block \(B_{22}\) is defined as the matrix

Similarly, provided \(B_{11}\) is invertible,

\(\natural\)

LEMMA (Inverting a Block Matrix) \(\idx{inverting a block matrix lemma}\xdi\) Consider the matrix \(B \in \mathbb{R}^{n \times n}\) in block form as

where \(B_{11} \in \mathbb{R}^{n_1 \times n_1}\), \(B_{22} \in \mathbb{R}^{n - n_1 \times n - n_1}\), \(B_{12} \in \mathbb{R}^{n_1 \times n - n_1}\), and \(B_{21} \in \mathbb{R}^{n - n_1 \times n_1}\). Then, provided \(B_{22}\) is invertible,

Alternatively, provided \(B_{11}\) is invertible,

\(\flat\)

Proof idea: One way to prove this is to multiply \(B\) and \(B^{-1}\) and check that the identity matrix comes out (try it!). We give a longer proof that provides more insight into where the formula is coming from.

The trick is to multiply \(B\) on the left and right by carefully chosen block triangular matrices with identity matrices on the diagonal (which we know are invertible by a previous example) to produce a block diagonal matrix with invertible matrices on the diagonal (which we know how to invert by a previous example).

Proof: We only prove the first formula. The proof is a calculation based on the formula for matrix products of block matrices. We will need that, if \(C\), \(D\), and \(E\) are invertible and of the same size, then \((CDE)^{-1} = E^{-1} D^{-1} C^{-1}\) (check it!).

We make a series of observations.

1- Our first step is to get a zero block in the upper right corner using an invertible matrix. Note that (recall that the order of multiplication matters here!)

2- Next we get a zero block in the bottom left corner. Starting from the final matrix in the last display,

3- Combining the last two steps, we have shown that

Using the formula for the inverse of a product of three invertible matrices, we obtain

4- Rearranging and using the formula for the inverse of a block diagonal matrix, we finally get

as claimed. \(\square\)

The positive definite case In applying the inversion formula, it will be enough to restrict ourselves to the positive definite case, where the lemmas that follow guarantee the required invertibility conditions.

First:

LEMMA (Invertibility of Positive Definite Matrices) \(\idx{invertibility of positive definite matrices}\xdi\) Let \(B \in \mathbb{R}^{n \times n}\) be symmetric, positive definite. Then \(B\) is invertible. \(\flat\)

Proof: For any \(\mathbf{x} \neq \mathbf{0}\), it holds by positive definiteness that \(\mathbf{x}^T B \mathbf{x} > 0\). In particular, it must be that \(\mathbf{x}^T B \mathbf{x} \neq 0\) and therefore, by contradiction, \(B \mathbf{x} \neq \mathbf{0}\) (since for any \(\mathbf{z}\), it holds that \(\mathbf{z}^T \mathbf{0} = 0\)). The claim follows from the Equivalent Definition of Linear Independence. \(\square\)

A principal submatrix is a square submatrix obtained by removing some rows and columns. Moreover we require that the set of row indices that remain is the same as the set of column indices that remain.

LEMMA (Principal Submatrices) \(\idx{principal submatrices lemma}\xdi\) Let \(B \in \mathbb{R}^{n \times n}\) be positive definite and let \(Z \in \mathbb{R}^{n \times p}\) have full column rank. Then \(Z^T B Z\) is positive definite. In particular all principal submatrices of positive definite matrices are positive definite. \(\flat\)

Proof: If \(\mathbf{x} \neq \mathbf{0}\), then \(\mathbf{x}^T (Z^T B Z) \mathbf{x} = \mathbf{y}^T B \mathbf{y}\), where we defined \(\mathbf{y} = Z \mathbf{x}\). Because \(Z\) has full column rank and \(\mathbf{x} \neq \mathbf{0}\), it follows that \(\mathbf{y} \neq \mathbf{0}\) by the Equivalent Definition of Linear Independence. Hence, since \(B \succ 0\), we have \(\mathbf{y}^T B \mathbf{y} > 0\) which proves the first claim. For the second claim, take \(Z\) of the form \((\mathbf{e}_{m_1}\ \mathbf{e}_{m_2}\ \ldots\ \mathbf{e}_{m_p})\), where the indices \(m_1, \ldots, m_p\) are distinct and increasing. The columns of \(Z\) are then linearly independent since they are distinct basis vectors. \(\square\)

To better understand the last claim in the proof, note that

So if the \(i\)-th column of \(Z\) is \(\mathbf{e}_{m_i}\) and the \(j\)-th column of \(Z\) is \(\mathbf{e}_{m_j}\), then the rightmost summation picks up only one element, \(B_{m_i, m_j}\). In other words, \(Z^T B Z\) is the principal submatrix of \(B\) corresponding to rows and columns \(m_1, \ldots, m_p\).

KNOWLEDGE CHECK: Consider the matrices

Which of the following matrices is \(Z^T A Z\)?

a) \(\begin{pmatrix} 1 & 0\\ 6 & 1 \end{pmatrix}\)

b) \(\begin{pmatrix} 8 & 0\\ 2 & 1 \end{pmatrix}\)

c) \(\begin{pmatrix} 2 & 8 & 4 & 0\\ 3 & 2 & 0 & 1 \end{pmatrix}\)

d) \(\begin{pmatrix} 1 & 0\\ 2 & 4\\ 6 & 1\\ 3 & 0 \end{pmatrix}\)

\(\checkmark\)

LEMMA (Schur Complement) \(\idx{Schur complement lemma}\xdi\) Let \(B \in \mathbb{R}^{n \times n}\) be positive definite and write it in block form

where \(B_{11} \in \mathbb{R}^{n_1 \times n_1}\) and \(B_{22} \in \mathbb{R}^{n - n_1 \times n - n_1}\) are symmetric, and \(B_{12} \in \mathbb{R}^{n_1 \times n - n_1}\) . Then the Schur complement of the block \(B_{11}\), i.e., the matrix \(B/B_{11} := B_{22} - B_{12}^T B_{11}^{-1} B_{12}\), is well-defined, symmetric, and positive definite. The same holds for \(B/B_{22} := B_{11} - B_{12} B_{22}^{-1} B_{12}^T\). \(\flat\)

Proof: By the Principal Submatrices Lemma, \(B_{11}\) is positive definite. By the Invertibility of Positive Definite Matrices, \(B_{11}\) is therefore invertible. Hence the Schur complement is well defined. Moreover, it is symmetric since

by the symmetry of \(B_{11}\), \(B_{22}\), and \(B_{11}^{-1}\) (prove that last one!).

For a non-zero \(\mathbf{x} \in \mathbb{R}^{n_2}\), let

The result then follows from the observation that

\(\square\)

Marginals and conditionals We are now ready to derive the distribution of marginals and conditionals of multivariate Gaussians\(\idx{multivariate Gaussian}\xdi\). Recall that a multivariate Gaussian\(\idx{multivariate normal}\xdi\) vector \(\mathbf{X} = (X_1,\ldots,X_d)\) on \(\mathbb{R}^d\) with mean \(\bmu \in \mathbb{R}^d\) and positive definite covariance matrix \(\bSigma \in \mathbb{R}^{d \times d}\) has probability density function

Recall that one way to compute \(|\bSigma|\) is as the product of all eigenvalues of \(\bSigma\) (with repeats). The matrix \(\bLambda = \bSigma^{-1}\) is called the precision matrix.

Partition \(\mathbf{X}\) as the column vector \((\mathbf{X}_1, \mathbf{X}_2)\) where \(\mathbf{X}_i \in \mathbb{R}^{d_i}\), \(i=1,2\), with \(d_1 + d_2 = d\). Similarly, consider the corresponding block vectors and matrices

Note that by the symmetry of \(\bSigma\), we have \(\bSigma_{21} = \bSigma_{12}^T\). Furthemore, it can be proved that a symmetric, invertible matrix has a symmetric inverse (try it!) so that \(\bLambda_{21} = \bLambda_{12}^T\).

We seek to compute the marginals\(\idx{marginal}\xdi\) \(f_{\mathbf{X}_1}(\mathbf{x}_1)\) and \(f_{\mathbf{X}_2}(\mathbf{x}_2)\), as well as the conditional density of \(\mathbf{X}_1\) given \(\mathbf{X}_2\), which we denote as \(f_{\mathbf{X}_1|\mathbf{X}_2}(\mathbf{x}_1|\mathbf{x}_2)\), and similarly \(f_{\mathbf{X}_2|\mathbf{X}_1}(\mathbf{x}_2|\mathbf{x}_1)\).

By the multiplication rule, the joint density \(f_{\mathbf{X}_1,\mathbf{X}_2}(\mathbf{x}_1,\mathbf{x}_2)\) can be decomposed as

We use the Inverting a Block Matrix lemma to rewrite \(f_{\mathbf{X}_1,\mathbf{X}_2}(\mathbf{x}_1,\mathbf{x}_2)\) in this form and “reveal” the marginal and conditional\(\idx{conditional}\xdi\) involved. Indeed, once the joint density is in this form, by integrating over \(\mathbf{x}_1\) we obtain that the marginal density of \(\mathbf{X}_2\) is \(f_{\mathbf{X}_2}\) and the conditional density of \(\mathbf{X}_1\) given \(\mathbf{X}_2\) is obtained by taking the ratio of the joint and the marginal.

In fact, it will be easier to work with an expression derived in the proof of that lemma, specifically

We will use the fact that the first matrix on the last line is the transpose of the third one (check it!).

To evaluate the joint density, we need to expand the quadratic function \((\mathbf{x} - \bmu)^T \bSigma^{-1} (\mathbf{x} - \bmu)\) appearing in the exponential. We break this up in a few steps.

1- Note first that

and similarly for its transpose. We define

2- Plugging this back in the quadratic function, we get

Note that both terms have the same form as the original quadratic function.

3- Going back to the density, we use \(\propto\) to indicate that the expression holds up to a constant not depending on \(\mathbf{x}\). Using that the exponential of a sum is the product of exponentials, we obtain

4- We have shown that

and

In other words, the marginal density of \(\mathbf{X}_2\) is multivariate Gaussian with mean \(\bmu_2\) and covariance \(\bSigma_{22}\). The conditional density of \(\mathbf{X}_1\) given \(\mathbf{X}_2\) is multivariate Gaussian with mean \(\bmu_{1|2}(\mathbf{X}_2)\) and covariance \(\bSigma/\bSigma_{22} = \bSigma_{11} - \bSigma_{12} \bSigma_{22}^{-1} \bSigma_{12}^T\). We write this as

and

Similarly, by exchanging the roles of \(\mathbf{X}_1\) and \(\mathbf{X}_2\), we see that the marginal density of \(\mathbf{X}_1\) is multivariate Gaussian with mean \(\bmu_1\) and covariance \(\bSigma_{11}\). The conditional density of \(\mathbf{X}_2\) given \(\mathbf{X}_1\) is multivariate Gaussian with mean \(\bmu_{2|1}(\mathbf{X}_1) = \bmu_2 + \bSigma_{21} \bSigma_{11}^{-1} (\mathbf{X}_1 - \bmu_1)\) and covariance \(\bSigma/\bSigma_{11} = \bSigma_{22} - \bSigma_{12}^T \bSigma_{11}^{-1} \bSigma_{12}\).

KNOWLEDGE CHECK: Suppose \((X_1, X_2)\) is a bivariate Gaussian with mean \((0,0)\), variances \(2\) and correlation coefficient \(-1/2\). Conditioned on \(X_2 = 1\), what is the mean of \(X_1\)?

a) \(1/2\)

b) \(-1/2\)

c) \(1\)

d) \(-1\)

\(\checkmark\)

6.5.2. Kalman filter#

We consider a stochastic process\(\idx{stochastic process}\xdi\) \(\{\bX_t\}_{t=0}^T\) (i.e., a collection of random vectors – often indexed by time) with state space \(\S = \mathbb{R}^{d_0}\) of the following form

where the \(\bW_t\)s are i.i.d. \(N_{d_0}(\mathbf{0}, Q)\) and \(F\) and \(Q\) are known \(d_0 \times d_0\) matrices. We denote the initial state by \(\bX_0 \sim N_{d_0}(\bmu_0, \bSigma_0)\). We assume that the process \(\{\bX_t\}_{t=1}^T\) is not observed, but rather that an auxiliary observed process \(\{\bY_t\}_{t=1}^T\) with state space \(\S = \mathbb{R}^{d}\) satisfies

where the \(\bV_t\)s are i.i.d. \(N_d(\mathbf{0}, R)\) and \(H \in \mathbb{R}^{d \times d_0}\) and \(R \in \mathbb{R}^{d \times d}\) are known matrices. This is an example of a linear-Gaussian system\(\idx{linear-Gaussian system}\xdi\) (also known as linear-Gaussian state space model).

Our goal is to infer the unobserved states given the observed process. Specifically, we look at the filtering problem. Quoting Wikipedia:

The task is to compute, given the model’s parameters and a sequence of observations, the distribution over hidden states of the last latent variable at the end of the sequence, i.e. to compute P(x(t)|y(1),…,y(t)). This task is normally used when the sequence of latent variables is thought of as the underlying states that a process moves through at a sequence of points of time, with corresponding observations at each point in time. Then, it is natural to ask about the state of the process at the end.

Key lemma Given the structure of the linear-Gaussian model, the following lemma will play a key role.

LEMMA (Linear-Gaussian System) \(\idx{linear-Gaussian system lemma}\xdi\) Let \(\bW \sim N_{d}(\bmu, \bSigma)\) and \(\bW'|\bW \sim N_{d'}(A \,\bW, \bSigma')\) where \(A \in \mathbb{R}^{d' \times d}\) is a deterministic matrix, and \(\bSigma \in \mathbb{R}^{d \times d}\) and \(\bSigma' \in \mathbb{R}^{d' \times d'}\) are positive definite. Then, \((\bW, \bW')\) is multivariate Gaussian with mean vector

and positive definite covariance matrix

\(\flat\)

KNOWLEDGE CHECK: What are the dimensions of each block of \(\bSigma''\)? \(\checkmark\)

Proof: We have

We re-write the quadratic function in square brackets in the exponent as follows

where the last line defines \(\bLambda''\) and the second line shows that it is positive definite (why?). We have shown that \((\bW, \bW')\) is multivariate Gaussian with mean \((\bmu, A\bmu)\).

We use the Inverting a Block Matrix Lemma to invert \(\bLambda''\) and reveal the covariance matrix. (One could also compute the covariance directly; try it!) We break up \(\bLambda''\) into blocks

where \(\bLambda''_{11} \in \mathbb{R}^{d \times d}\), \(\bLambda''_{22} \in \mathbb{R}^{d' \times d'}\), and \(\bLambda''_{12} \in \mathbb{R}^{d \times d'}\). Recall that the inverse is

where here \(B = \bLambda''\).

The Schur complement is

Hence \((\bLambda'' / \bLambda''_{22})^{-1} = \bSigma\).

Moreover,

and

Plugging back into the formula for the inverse of a block matrix completes the proof. \(\square\)

Joint distribution We extend our previous notation as follows: for two disjoint, finite collections of random vectors \(\{\mathbf{U}_i\}_i\) and \(\{\mathbf{W}_j\}_j\) defined on the same probability space, we let \(f_{\{\mathbf{U}_i\}_i, \{\mathbf{W}_j\}_j}\) be their joint density, \(f_{\{\mathbf{U}_i\}_i | \{\mathbf{W}_j\}_j}\) be the conditional density of \(\{\mathbf{U}_i\}_i\) given \(\{\mathbf{W}_j\}_j\), and \(f_{\{\mathbf{U}_i\}_i}\) be the marginal density of \(\{\mathbf{U}_i\}_i\). Formally, our goal is to compute

recursively in \(t\), where we define \(\bY_{1:t} = \{\bY_1,\ldots,\bY_{t}\}\). We will see that all densities appearing in this calculation are multivariate Gaussians, and therefore keeping track of the means and covariances will suffice.

Formally, we posit that the density of the full process (with both observed and unobserved parts) is

where the description of the model stipulates that

and

for all \(t \geq 1\) and \(\bX_0 \sim N_{d_0}(\bmu_0, \bSigma_0)\). We assume that \(\bmu_0\) and \(\bSigma_0\) are known. Applying the Linear-Gaussian System Lemma inductively shows that the full process \((\bX_{0:T}, \bY_{1:T})\) is jointly multivariate Gaussian (try it!). In particular, all marginals and conditionals are multivariate Gaussians.

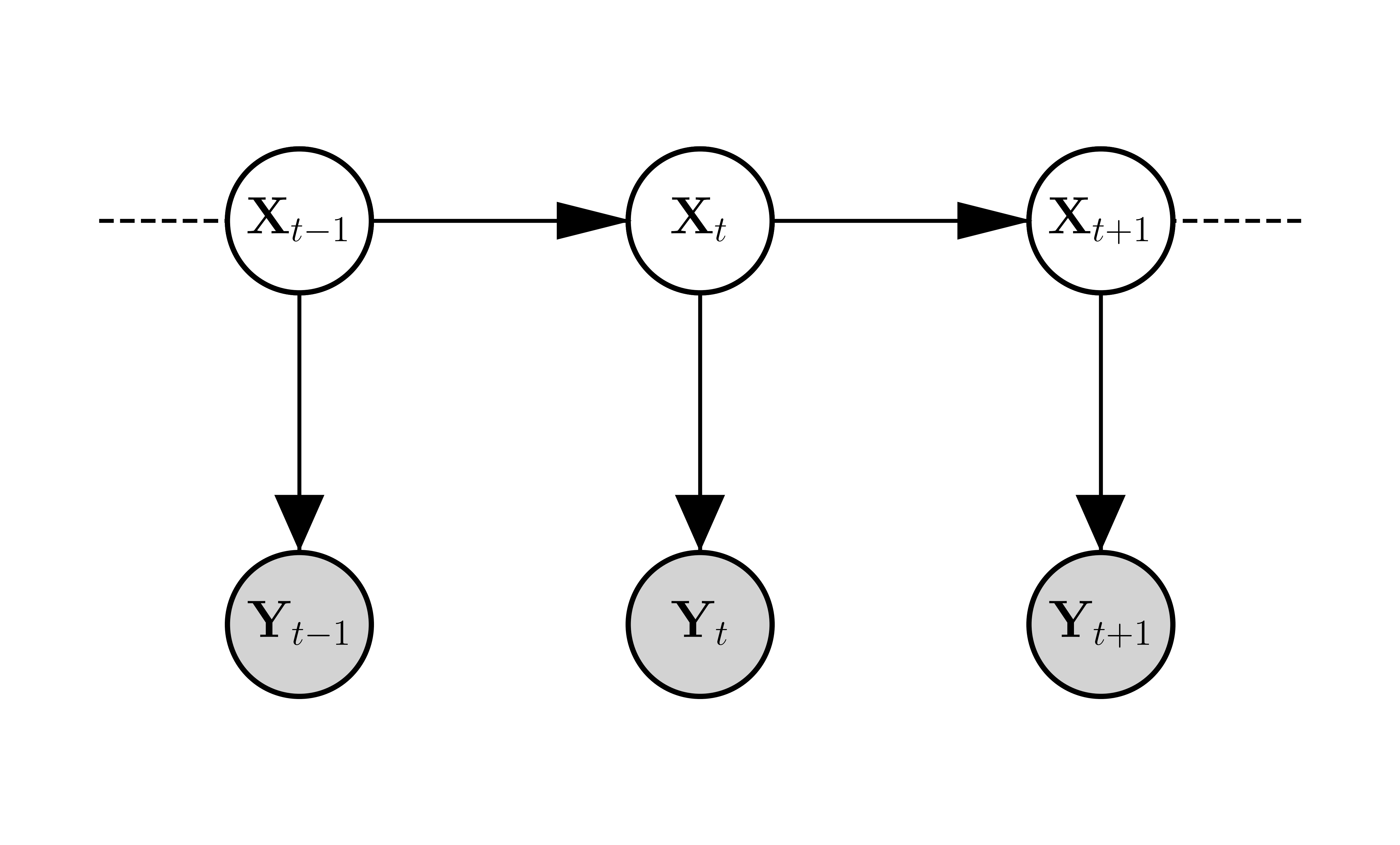

Graphically, it can be represented as follows, where as before each variable is a node and its conditional distribution depends only on its parent nodes.

Conditional independence The stipulated form of the joint density of the full process implies many conditional independence relations. We will need the following two:

1- \(\bX_t\) is conditionally independent of \(\bY_{1:t-1}\) given \(\bX_{t-1}\)

2- \(\bY_t\) is conditionally independent of \(\bY_{1:t-1}\) given \(\bX_{t}\).

We prove the first one and leave the second one as an exercise. In fact, we prove something stronger: \(\bX_t\) is conditionally independent of \(\bX_{0:t-2}, \bY_{1:t-1}\) given \(\bX_{t-1}\).

First, by integrating over \(\by_T\), then \(\bx_{T}\), then \(\by_{T-1}\), then \(\bx_{T-1}\), … , then \(\by_t\), we see that the joint density of \((\bX_{0:t}, \bY_{1:t-1})\) is

Similarly, the joint density of \((\bX_{0:t-1}, \bY_{1:t-1})\) is

The conditional density given \(\bX_{t-1}\) is then obtained by dividing the first expression by the marginal density of \(\bX_{t-1}\)

as claimed.

Algorithm \(\idx{Kalman filter}\xdi\) We give a recursive algorithm for solving the filtering problem, that is, for computing the mean vector and covariance matrix of the conditional density \(f_{\bX_{t}|\bY_{1:t}}\).

Initial step: The first compute \(f_{\bX_1|\bY_1}\). We do this through a series of observations which will generalize straightforwardly.

1- We have \(\bX_0 \sim N_{d_0}(\bmu_0, \bSigma_0)\) and \(\bX_{1}|\bX_{0} \sim N_{d_0}(F \,\bX_0, Q)\). So, by the Linear-Gaussian System Lemma, the joint vector \((\bX_0, \bX_{1})\) is multivariate Gaussian with mean vector \((\bmu_0, F \bmu_0)\) and covariance matrix

Hence the marginal density of \(\bX_{1}\) is multivariate Gaussian with mean vector \(F \bmu_0\) and covariance matrix

2- Combining the previous observation about the marginal density of \(\bX_{1}\) with the fact that \(\bY_1|\bX_1 \sim N_d(H\,\bX_1, R)\), the Linear-Gaussian System Lemma says that \((\bX_1, \bY_{1})\) is multivariate Gaussian with mean vector \((F \bmu_0, H F \bmu_0)\) and covariance matrix

Finally, define \(K_1 := P_0 H^T (H P_0 H^T + R)^{-1}\). This new observation and the conditional density formula give that

where we define

and

General step: Assuming by induction that \(\bX_{t-1}|\bY_{1:t-1} \sim N_{d_0}(\bmu_{t-1}, \bSigma_{t-1})\) where \(\bmu_{t-1}\) depends implicitly on \(\bY_{1:t-1}\) (but \(\bSigma_{t-1}\) does not), we deduce the next step. It mimics closely the initial step.

1- Predict: We first “predict” \(\bX_{t}\) given \(\bY_{1:t-1}\). We use the fact that \(\bX_{t-1}|\bY_{1:t-1} \sim N_{d_0}(\bmu_{t-1}, \bSigma_{t-1})\). Moreover, we have that \(\bX_{t}|\bX_{t-1} \sim N_{d_0}(F \,\bX_{t-1}, Q)\) and that \(\bX_t\) is conditionally independent of \(\bY_{1:t-1}\) given \(\bX_{t-1}\). So, \(\bX_{t}|\{\bX_{t-1}, \bY_{1:t-1}\} \sim N_{d_0}(F \,\bX_{t-1}, Q)\) by the Role of Independence Lemma. By the Linear-Gaussian System Lemma, the joint vector \((\bX_{t-1}, \bX_{t})\) conditioned on \(\bY_{1:t-1}\) is multivariate Gaussian with mean vector \((\bmu_{t-1}, F \bmu_{t-1})\) and covariance matrix

As a consequence, the conditional marginal density of \(\bX_{t}\) given \(\bY_{1:t-1}\) is multivariate Gaussian with mean vector \(F \bmu_{t-1}\) and covariance matrix

2- Update: Next we “update” our prediction of \(\bX_{t}\) using the new observation \(\bY_{t}\). We have that \(\bY_{t}|\bX_{t} \sim N_d(H\,\bX_{t}, R)\) and that \(\bY_t\) is conditionally independent of \(\bY_{1:t-1}\) given \(\bX_{t}\). So \(\bY_{t}|\{\bX_{t}, \bY_{1:t-1}\} \sim N_d(H\,\bX_{t}, R)\) by the Role of Independence lemma. Combining this with the previous observation, the Linear-Gaussian System lemma says that \((\bX_{t}, \bY_{t})\) given \(\bY_{1:t-1}\) is multivariate Gaussian with mean vector \((F \bmu_{t-1}, H F \bmu_{t-1})\) and covariance matrix

Finally, define \(K_{t} := P_{t-1} H^T (H P_{t-1} H^T + R)^{-1}\). This new observation and the conditional density formula give that

where we define

and

Summary: Let \(\bmu_t\) and \(\bSigma_t\) be the mean and covariance matrix of \(\bX_t\) conditioned on \(\bY_{1:t}\). The recursions for these quantities are the following:

where

This last matrix is known as the Kalman gain matrix. The vector \(\bY_{t} - H F \bmu_{t-1}\) is referred to as innovation; it compares the new observation \(\bY_{t}\) to its predicted expectation \(H F \bmu_{t-1}\) based on the previous observations. Hence, in some sense, the Kalman gain matrix\(\idx{Kalman gain matrix}\xdi\) represents the “weight” given to the observation at time \(t\) when updating the state estimate \(\bmu_t\). The solution above is known as Kalman filtering.

CHAT & LEARN Explore the concept of sequential Monte Carlo methods, also known as particle filters, as an alternative to the Kalman filter. Ask your favorite AI chatbot for an explanation and implementation of a particle filter. Try it on this dataset. (Open In Colab) \(\ddagger\)

6.5.3. Back to location tracking#

We apply Kalman filtering to location tracking. Returning to our cyborg corgi example, we imagine that we get noisy observations about its successive positions in a park. (Think of GPS measurements.) We seek to get a better estimate of its location using the method above.

Figure: Cyborg corgi (Credit: Made with Midjourney)

\(\bowtie\)

We model the true location as a linear-Gaussian system over the 2d position \((z_{1,t}, z_{2,t})_t\) and velocity \((\dot{z}_{1,t}, \dot{z}_{2,t})_t\) sampled at \(\Delta\) intervals of time. Formally,

so the unobserved dynamics are

where the \(\bW_t = (W_{1,t}, W_{2,t}, \dot{W}_{1,t}, \dot{W}_{2,t}) \sim N_{d_0}(\mathbf{0}, Q)\) with \(Q\) known.

In words, the velocity is unchanged, up to Gaussian perturbation. The position changes proportionally to the velocity in the corresponding dimension, again up to Gaussian perturbation.

The observations \((\tilde{z}_{1,t}, \tilde{z}_{2,t})_t\) are modeled as

so the observed process satisfies

where the \(\bV_t = (V_{1,t}, V_{2,t}) \sim N_d(\mathbf{0}, R)\) with \(R\) known.

In words, we only observe the positions, up to Gaussian noise.

Implementing the Kalman filter We implement the Kalman filter as described above with known covariance matrices. We take \(\Delta = 1\) for simplicity. The code is adapted from [Mur0].

We will test Kalman filtering on a simulated path drawn from the linear-Gaussian model above. The following function creates such a path and its noisy observations.

def lgSamplePath(rng, ss, os, F, H, Q, R, init_mu, init_Sig, T):

x = np.zeros((ss,T))

y = np.zeros((os,T))

x[:,0] = rng.multivariate_normal(init_mu, init_Sig)

for t in range(1,T):

x[:,t] = rng.multivariate_normal(F @ x[:,t-1],Q)

y[:,t] = rng.multivariate_normal(H @ x[:,t],R)

return x, y

NUMERICAL CORNER: Here is an example.

seed = 535

rng = np.random.default_rng(seed)

ss = 4 # state size

os = 2 # observation size

F = np.array([[1., 0., 1., 0.],

[0., 1., 0., 1.],

[0., 0., 1., 0.],

[0., 0., 0., 1.]])

H = np.array([[1., 0., 0., 0.],

[0., 1, 0., 0.]])

Q = 0.1 * np.diag(np.ones(ss))

R = 10 * np.diag(np.ones(os))

init_mu = np.array([0., 0., 1., 1.])

init_Sig = 1 * np.diag(np.ones(ss))

T = 50

x, y = lgSamplePath(rng, ss, os, F, H, Q, R, init_mu, init_Sig, T)

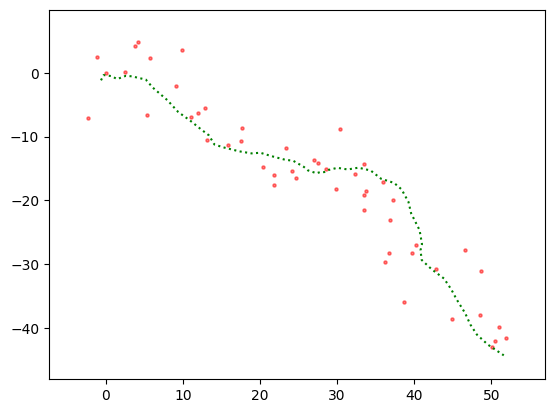

In the next plot (and throughout this section), the dots are the noisy observations. The unobserved true path is also shown as a dotted line.

Show code cell source

plt.scatter(y[0,:], y[1,:], s=5, c='r', alpha=0.5)

plt.plot(x[0,:], x[1,:], c='g', linestyle='dotted')

plt.xlim((np.min(y[0,:])-5, np.max(y[0,:])+5))

plt.ylim((np.min(y[1,:])-5, np.max(y[1,:])+5))

plt.show()

\(\unlhd\)

The following function implements the Kalman filter. The full recursion is broken up into several steps. We use numpy.linalg.inv to compute the Kalman gain matrix. Below, mu_pred is \(F \bmu_{t-1}\) and Sig_pred is \(P_{t-1} = F \bSigma_{t-1} F^T + Q\), which are the mean vector and covariance matrix of \(\bX_{t}\) given \(\bY_{1:t-1}\) as computed in the Predict step.

def kalmanUpdate(ss, F, H, Q, R, y_t, mu_prev, Sig_prev):

mu_pred = F @ mu_prev

Sig_pred = F @ Sig_prev @ F.T + Q

e_t = y_t - H @ mu_pred

S = H @ Sig_pred @ H.T + R

Sinv = LA.inv(S)

K = Sig_pred @ H.T @ Sinv

mu_new = mu_pred + K @ e_t

Sig_new = (np.diag(np.ones(ss)) - K @ H) @ Sig_pred

return mu_new, Sig_new

def kalmanFilter(ss, os, y, F, H, Q, R, init_mu, init_Sig, T):

mu = np.zeros((ss, T))

Sig = np.zeros((ss, ss, T))

mu[:,0] = init_mu

Sig[:,:,0] = init_Sig

for t in range(1,T):

mu[:,t], Sig[:,:,t] = kalmanUpdate(ss, F, H, Q, R, y[:,t], mu[:,t-1], Sig[:,:,t-1])

return mu, Sig

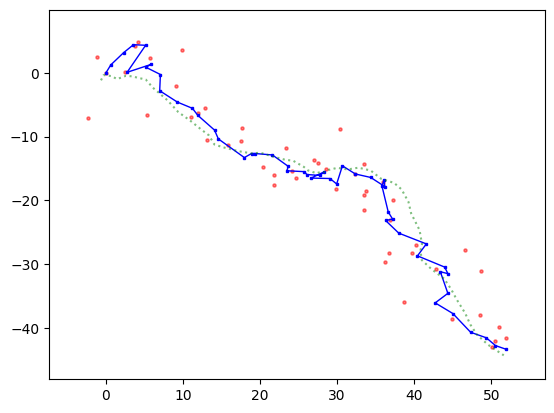

NUMERICAL CORNER: We apply this to the location tracking example. The inferred states, or more precisely their estimated mean, are in blue. Note that we also inferred the velocity at each time point, but we are not plotting that information.

Show code cell source

init_mu = np.array([0., 0., 1., 1.])

init_Sig = 1 * np.diag(np.ones(ss))

mu, Sig = kalmanFilter(ss, os, y, F, H, Q, R, init_mu, init_Sig, T)

plt.plot(mu[0,:], mu[1,:], c='b', marker='s', markersize=2, linewidth=1)

plt.scatter(y[0,:], y[1,:], s=5, c='r', alpha=0.5)

plt.plot(x[0,:], x[1,:], c='g', linestyle='dotted', alpha=0.5)

plt.xlim((np.min(y[0,:])-5, np.max(y[0,:])+5))

plt.ylim((np.min(y[1,:])-5, np.max(y[1,:])+5))

plt.show()

To quantify the improvement in the inferred means compared to the observations, we compute the mean squared error in both cases.

dobs = x[0:1,:] - y[0:1,:]

mse_obs = np.sqrt(np.sum(dobs**2))

print(mse_obs)

22.891982252201856

dfilt = x[0:1,:] - mu[0:1,:]

mse_filt = np.sqrt(np.sum(dfilt**2))

print(mse_filt)

9.778610100463018

We indeed observe a substantial reduction.

\(\unlhd\)

CHAT & LEARN The Kalman filter assumes that the parameters of the state evolution and observation models are known. Ask your favorite AI chatbot about methods for estimating these parameters from data, such as the expectation-maximization algorithm or the variational Bayes approach. \(\ddagger\)

Self-assessment quiz (with help from Claude, Gemini, and ChatGPT)

1 Which of the following is the Schur complement of the block \(B_{11}\) in the positive definite matrix \(B = \begin{pmatrix} B_{11} & B_{12} \\ B_{12}^T & B_{22} \end{pmatrix}\)?

a) \(B_{22} - B_{12}^T B_{11}^{-1} B_{12}\)

b) \(B_{11} - B_{12} B_{22}^{-1} B_{12}^T\)

c) \(B_{22}\)

d) \(B_{11}\)

2 Which of the following is true about the Schur complement \(B/B_{11}\) of the block \(B_{11}\) in a positive definite matrix \(B\)?

a) It is always symmetric.

b) It is always positive definite.

c) Both a and b.

d) Neither a nor b.

3 What is the conditional distribution of \(\mathbf{X}_1\) given \(\mathbf{X}_2\) in a multivariate Gaussian distribution?

a) \(\mathbf{X}_1 | \mathbf{X}_2 \sim N_{d_1}(\boldsymbol{\mu}_1 + \bSigma_{12}\bSigma_{22}^{-1}(\mathbf{X}_2 - \boldsymbol{\mu}_2), \bSigma_{11} - \bSigma_{12}\bSigma_{22}^{-1}\bSigma_{12}^T)\)

b) \(\mathbf{X}_1 | \mathbf{X}_2 \sim N_{d_1}(\boldsymbol{\mu}_1 - \bSigma_{12}\bSigma_{22}^{-1}(\mathbf{X}_2 - \boldsymbol{\mu}_2), \bSigma_{11} + \bSigma_{12}\bSigma_{22}^{-1}\bSigma_{12}^T)\)

c) \(\mathbf{X}_1 | \mathbf{X}_2 \sim N_{d_1}(\boldsymbol{\mu}_1 + \bSigma_{12}\bSigma_{22}^{-1}(\mathbf{X}_2 - \boldsymbol{\mu}_2), \bSigma_{11} + \bSigma_{12}\bSigma_{22}^{-1}\bSigma_{12}^T)\)

d) \(\mathbf{X}_1 | \mathbf{X}_2 \sim N_{d_1}(\boldsymbol{\mu}_1 - \bSigma_{12}\bSigma_{22}^{-1}(\mathbf{X}_2 - \boldsymbol{\mu}_2), \bSigma_{11} - \bSigma_{12}\bSigma_{22}^{-1}\bSigma_{12}^T)\)

4 In a linear-Gaussian system, which of the following is true about the conditional independence relationships?

a) \(\mathbf{X}_t\) is conditionally independent of \(\mathbf{Y}_{1:t-1}\) given \(\mathbf{X}_{t-1}\).

b) \(\mathbf{Y}_t\) is conditionally independent of \(\mathbf{Y}_{1:t-1}\) given \(\mathbf{X}_t\).

c) \(\mathbf{X}_t\) is conditionally independent of \(\mathbf{X}_{t-2}\) given \(\mathbf{X}_{t-1}\).

d) All of the above.

5 In the Kalman filter, what does the Kalman gain matrix \(K_t\) represent?

a) The covariance matrix of the state estimate at time \(t\).

b) The covariance matrix of the observation at time \(t\).

c) The weight given to the observation at time \(t\) when updating the state estimate.

d) The weight given to the previous state estimate when predicting the current state.

Answer for 1: a. Justification: The text defines the Schur complement of \(B_{11}\) as \(B / B_{11} := B_{22} - B_{12}^T B_{11}^{-1} B_{12}\).

Answer for 2: c. Justification: The text states “the Schur complement of the block \(B_{11}\), i.e., the matrix \(B/B_{11} := B_{22} - B_{12}^T B_{11}^{-1} B_{12}\), is symmetric and positive definite.”

Answer for 3: a. Justification: The conditional distribution of \(\mathbf{X}_1\) given \(\mathbf{X}_2\) is derived in the text as \(\mathbf{X}_1 | \mathbf{X}_2 \sim N_{d_1}(\boldsymbol{\mu}_1 + \bSigma_{12}\bSigma_{22}^{-1}(\mathbf{X}_2 - \boldsymbol{\mu}_2), \bSigma_{11} - \bSigma_{12}\bSigma_{22}^{-1}\bSigma_{12}^T)\).

Answer for 4: d. Justification: The text explicitly states these conditional independence relationships.

Answer for 5: c. Justification: The text defines \(K_t := P_{t-1} H^T (H P_{t-1} H^T + R)^{-1}\) as the Kalman gain matrix, which is used to update the state estimate as \(\boldsymbol{\mu}_t := F \boldsymbol{\mu}_{t-1} + K_t (\mathbf{Y}_t - H F \boldsymbol{\mu}_{t-1})\), where \(K_t\) weighs the innovation \((\mathbf{Y}_t - H F \boldsymbol{\mu}_{t-1})\).